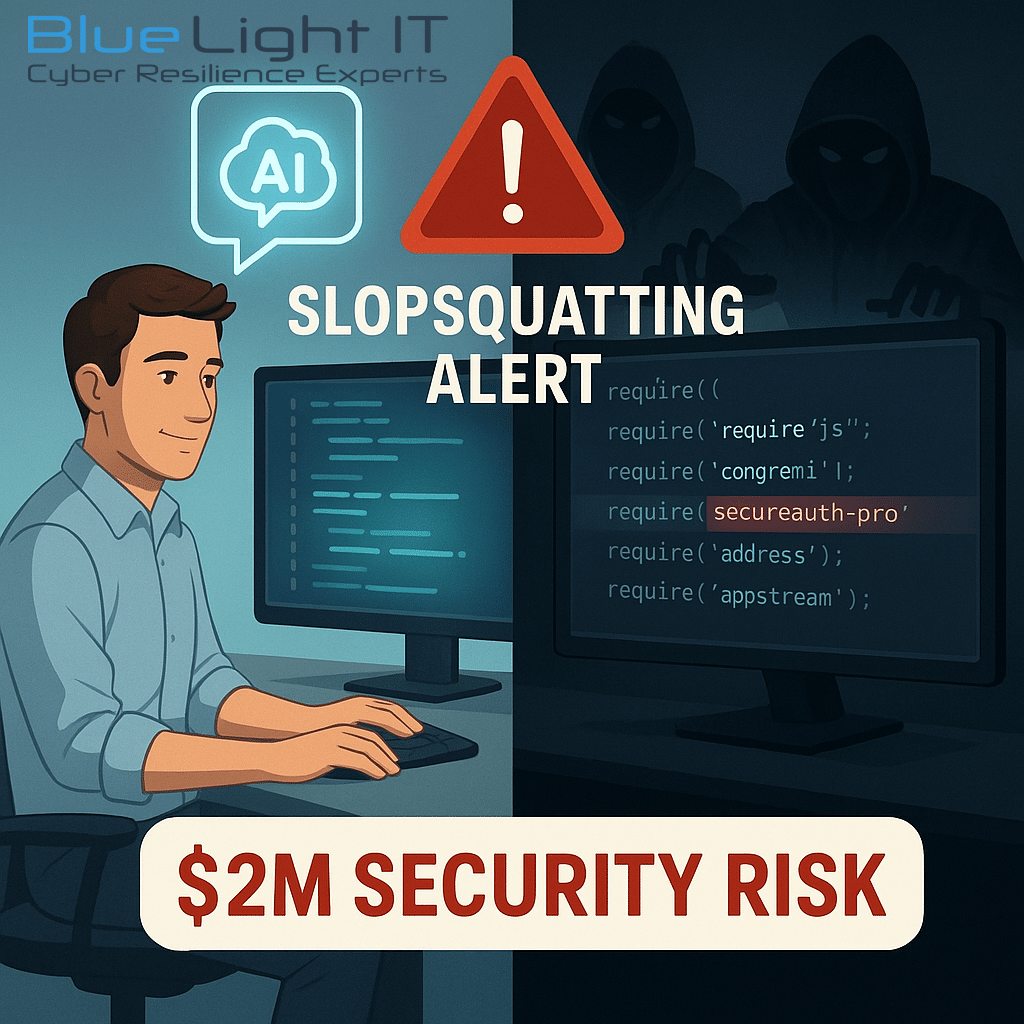

How Slopsquatting Exploits AI Hallucinations to Compromise Your Code

In my 20+ years leading cybersecurity initiatives, I’ve witnessed numerous attack vectors emerge, but slopsquatting represents a uniquely dangerous evolution targeting AI-assisted development workflows.

Even if you’re not writing code yourself, your internal or outsourced developers might be exposing your business to serious risk, without even realizing it.

Understanding Slopsquatting: The New Supply Chain Vulnerability

Slopsquatting targets the gap between AI imagination and reality in modern development practices. Now that “vibe coding” (describing the functionality you want and letting AI generate the code) is becoming standard practice, slopsquatting exploits have increased by 300% in the past six months alone.

Here’s why slopsquatting is so effective:

When AI tools suggest code libraries (also called “packages”) that don’t actually exist, attackers register those names and embed malware inside them. Developers then install these fake but functional packages, unknowingly completing the attack.
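For Python teams, a quick first check is to ask the official registry whether an AI-suggested name exists at all. The sketch below assumes PyPI’s public JSON endpoint (https://pypi.org/pypi/<name>/json, which answers with HTTP 404 for unknown projects); the helper name `package_exists_on_pypi` is our own, hypothetical choice. A “not found” result means the name was hallucinated. A “found” result is not proof of safety, since an attacker may already have registered the name.

```python
import json
import urllib.error
import urllib.parse
import urllib.request

# PyPI's public JSON metadata endpoint; it answers HTTP 404 for unknown projects.
PYPI_JSON_URL = "https://pypi.org/pypi/{name}/json"


def package_exists_on_pypi(name: str, opener=urllib.request.urlopen, timeout: float = 10.0) -> bool:
    """Return True if PyPI has a project called `name`, False if PyPI returns 404.

    `opener` defaults to urllib's urlopen and can be swapped out in tests.
    Any other error (network failure, 5xx) is re-raised, so a flaky connection
    is never mistaken for "this package does not exist".
    """
    url = PYPI_JSON_URL.format(name=urllib.parse.quote(name))
    try:
        with opener(url, timeout=timeout) as response:
            json.load(response)  # confirm we received real project metadata
        return True
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False
        raise
```

Calling `package_exists_on_pypi("authlock-pro")` performs a live lookup, so the answer depends on what is registered at the moment you run it.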

The Slopsquatting Attack Chain Explained

- Your AI assistant suggests importing a non-existent package (e.g., authlock-pro)

- Slopsquatting actors quickly register that fictional package name in public repositories

- Developers install these packages, unwittingly enabling the attack

- The malicious package works as expected, while quietly stealing data or creating backdoors
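The first link in that chain is the easiest place to break it. As a minimal sketch, assuming Python and only the standard library, the code below parses an AI-generated snippet and lists the imported modules that are not installed in the current environment: exactly the names a developer would be tempted to pip install next. The function names are hypothetical, and module names don’t always match package names (the `yaml` module, for instance, comes from the PyYAML package), so treat the output as a review list rather than a verdict.

```python
import ast
import importlib.util


def top_level_imports(source: str) -> set[str]:
    """Collect the top-level module names imported by a piece of Python source."""
    names: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                names.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # level == 0 skips relative imports such as "from . import helpers".
            names.add(node.module.split(".")[0])
    return names


def unresolved_imports(source: str) -> list[str]:
    """Return imported modules that cannot be found in the current environment.

    find_spec locates a top-level module without executing it, so nothing
    from the AI-generated snippet is run during the check.
    """
    return sorted(
        name
        for name in top_level_imports(source)
        if importlib.util.find_spec(name) is None
    )


# Hypothetical AI-generated snippet; "authlock_pro" is the import form of the
# fictional authlock-pro package mentioned above.
ai_snippet = """
import os
import json.decoder
from authlock_pro import verify_token
"""
print(unresolved_imports(ai_snippet))  # ['authlock_pro'] unless such a module is installed
```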

Recent security research found that, on average, 5.2% of the packages recommended by commercial AI coding models and 21.7% of those recommended by open-source models do not exist. Each hallucinated name is a slopsquatting opportunity, making slopsquatting one of the fastest-growing security threats in modern development.

Why Slopsquatting Succeeds in Business Environments

- These malicious packages often come with professional-looking documentation and GitHub pages

- Standard antivirus and security scans often miss them, since they appear functional

- Attackers specifically target packages related to authentication and data handling

- Developers trust AI recommendations, assuming the tools are safe by default

Defending Against Slopsquatting: A 4-Step Framework

If your company builds or maintains software, even through contractors, here’s how to defend against slopsquatting:

- Validate Every New Package — Require verification of every dependency against official registries

- Use Detection Tools — Tools like Socket and Snyk now scan for hallucinated or suspicious packages

- Train Your Team — Developers and IT staff need to know what slopsquatting looks like

- Control Package Sources — Use internal package repositories that only include vetted libraries
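Steps 1 and 4 lend themselves to automation. The sketch below assumes a Python project with a requirements.txt file and uses a hypothetical `VETTED_PACKAGES` set as a stand-in for the approved contents of an internal repository; it flags every dependency that isn’t on that list. Names are compared after PEP 503 normalization, so `PyJWT` and `pyjwt` count as the same project. The parser is deliberately simplified and skips any line starting with a dash (pip options such as --index-url, -r or -e).

```python
import re
from typing import Optional

# Hypothetical allowlist standing in for your internal, vetted repository.
VETTED_PACKAGES = {"requests", "cryptography", "PyJWT"}


def normalize(name: str) -> str:
    """Normalize a project name as PEP 503 does: runs of -, _ and . become '-', lowercased."""
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(line: str) -> Optional[str]:
    """Extract the project name from one requirements.txt line (simplified parser)."""
    line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
    if not line or line.startswith("-"):
        return None  # blank line, comment, or a pip option such as --index-url
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
    return match.group(0) if match else None


def unvetted_requirements(requirements_text: str, vetted: set[str]) -> list[str]:
    """Return requirement names that are not on the vetted allowlist, in file order."""
    allowed = {normalize(name) for name in vetted}
    flagged = []
    for raw_line in requirements_text.splitlines():
        name = requirement_name(raw_line)
        if name is not None and normalize(name) not in allowed:
            flagged.append(name)
    return flagged


# Hypothetical requirements file; authlock-pro is the fictional package from above.
requirements = """
requests>=2.31
pyjwt==2.8.0
authlock-pro==1.0.2  # suggested by an AI assistant
cryptography[ssh]
"""
print(unvetted_requirements(requirements, VETTED_PACKAGES))  # ['authlock-pro']
```

In a CI pipeline, a non-empty result could block the merge until someone has reviewed the new dependency.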

Real-World Slopsquatting Attempts

Last quarter, our security team caught three instances of slopsquatting targeting a financial application in development. The fake packages were sophisticated enough to bypass traditional security checks; our advanced AI-specific monitoring was the only reason we caught them before deployment.

What This Means for Business Leaders

You don’t need to ditch AI development tools, but you do need to build awareness and prevention into your workflows. This is a new kind of supply chain risk, and it affects every business using AI-generated code or outsourcing development.

Blue Light IT helps businesses integrate AI securely, validating every component before it becomes a threat. If you’re using AI in your development process, or plan to, you need a partner who understands both the opportunity and the risk.

Frequently Asked Questions (FAQ) About Slopsquatting

Slopsquatting is so dangerous because the malicious packages:

– Look legitimate

– Include documentation and working functionality

– Bypass traditional security scans

This makes them highly effective at infiltrating systems without raising alarms, especially in fast-paced development environments that rely heavily on AI.

According to recent research:

– On average, 5.2% of the packages suggested by commercial AI coding models don’t exist (they are hallucinated).

– For open-source AI models, the figure rises to 21.7%.

Attackers are actively monitoring these suggestions and registering the fake packages, making this a rapidly growing threat.

Any business that:

– Uses AI-assisted code generation (e.g., GitHub Copilot, ChatGPT)

– Allows developers to install packages without validation

– Relies on third-party or outsourced development teams

is potentially vulnerable. This applies to startups, SMBs, and enterprises alike.

To protect your business, apply the same four steps outlined above:

1. Validate Packages: Ensure all new packages are verified against official registries.

2. Use Detection Tools: Tools like Socket and Snyk can flag suspicious dependencies.

3. Educate Developers: Train your teams to recognize and question unknown packages.

4. Restrict Sources: Use internal, vetted package repositories to control what gets installed.
